Back in the day, we had a monolithic application running on a heavy VM. We knew everything about it: where it was running, its IP address, which TCP/UDP port it was listening on, its status and health, and so on. When we wanted to find that app, we knew where to look. We even gave it a static IP address and advertised its DNS name so other apps could refer to it. But things have changed, and the monolith is now a bunch of microservices running in Docker containers.

The new microservices architecture has many advantages: flexibility, portability, agility, and scalability, to name a few. But it also introduces some challenges, especially for operations teams used to the monolithic approach. One of them is stochasticity: there is an element of randomness when deploying microservices (especially on a cluster of nodes, e.g., Swarm), because some attributes that were well defined in the old days become unknown or hard to find with the new architecture.

In this article, I will describe how the challenge of microservices stochasticity can be addressed with a service-discovery service called Interlock. I will also describe how to scale these microservices by combining Interlock with Docker Compose and an HAProxy/NGINX service.

Let’s start with a simple app composed of two microservices: web (Python) and db (Mongo). Each of these microservices does one thing only. The web microservice receives HTTP requests and responds to them. The db microservice stores data persistently and serves it when the web microservice requests it. Both microservices are described in a docker-compose.yml file. If you’re not familiar with Docker Compose, please visit this site to learn more.

```yaml
# Mongo DB
db:
  image: mongo
  expose:
    - 27017
  command: --smallfiles

# Python App
web:
  build: .
  ports:
    - "5000"
  links:
    - db:db
```

With Docker Compose installed, we can run this app from the docker-compose.yml file on a single Docker Engine or on a Docker Swarm cluster. In this example, I will be running it on a Swarm cluster. Docker Compose takes care of building/pulling the images and spinning up the two containers on one of the Swarm nodes. Note: since these two services are linked, Swarm ensures they are placed on the same node.

```console
dockchat# docker-compose up -d
Recreating dockchat_db_1...
Recreating dockchat_web_1...
dockchat# docker-compose ps
Name             Command                       State   Ports
------------------------------------------------------------------------------
dockchat_db_1    /entrypoint.sh --smallfiles   Up      27017/tcp
dockchat_web_1   python webapp.py              Up      10.0.0.80:32829->5000/tcp
```

Now let’s use Docker Compose’s handy “scale” feature, which creates additional containers from the same service definition. In this case, let’s scale our web service to five containers.

```console
# docker-compose scale web=5
Creating dockchat_web_2...
Creating dockchat_web_3...
Creating dockchat_web_4...
Creating dockchat_web_5...
Starting dockchat_web_2...
Starting dockchat_web_3...
Starting dockchat_web_4...
Starting dockchat_web_5...
# docker-compose ps
Name             Command                       State   Ports
------------------------------------------------------------------------------
dockchat_db_1    /entrypoint.sh --smallfiles   Up      27017/tcp
dockchat_web_1   python webapp.py              Up      10.0.0.80:32829->5000/tcp
dockchat_web_2   python webapp.py              Up      10.0.0.80:32830->5000/tcp
dockchat_web_3   python webapp.py              Up      10.0.0.80:32831->5000/tcp
dockchat_web_4   python webapp.py              Up      10.0.0.80:32832->5000/tcp
dockchat_web_5   python webapp.py              Up      10.0.0.80:32833->5000/tcp
```

Looks good so far! The app is up on one of the Swarm nodes, but how can we access it? Which node/IP is it running on? What happens if we redeploy this app? Are all the web containers being utilized?
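Each replica received its own randomly assigned host port, so even finding one web container means reading the Ports column by hand. The sketch below does that lookup mechanically. It assumes the plain-text `docker-compose ps` layout shown above, and `published_endpoints` is a hypothetical helper, not part of Docker Compose.

```python
from __future__ import annotations

import re

# A published-port mapping such as "10.0.0.80:32829->5000/tcp".
PORT_MAPPING = re.compile(r"(\d{1,3}(?:\.\d{1,3}){3}):(\d+)->(\d+)/(?:tcp|udp)")


def published_endpoints(ps_output: str) -> dict[str, str]:
    """Hypothetical helper: map container name -> "host_ip:host_port".

    Assumes the plain-text `docker-compose ps` layout shown above, where the
    container name is the first whitespace-separated field of each row.
    Only the first port mapping on a row is used.
    """
    endpoints = {}
    for line in ps_output.splitlines():
        match = PORT_MAPPING.search(line)
        if match is None:
            continue  # header, separator, or a container with no published port
        host_ip, host_port = match.group(1), match.group(2)
        endpoints[line.split()[0]] = f"{host_ip}:{host_port}"
    return endpoints


sample = """\
dockchat_db_1    /entrypoint.sh --smallfiles   Up   27017/tcp
dockchat_web_1   python webapp.py              Up   10.0.0.80:32829->5000/tcp
dockchat_web_2   python webapp.py              Up   10.0.0.80:32830->5000/tcp
"""
print(published_endpoints(sample))
# {'dockchat_web_1': '10.0.0.80:32829', 'dockchat_web_2': '10.0.0.80:32830'}
```

Note that the result is only a snapshot: redeploying or rescaling the app can hand out different ports, and on a multi-node cluster different IPs as well.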

All of these are valid questions, and they highlight the challenge of stochasticity that I described earlier. There needs to be a better way to access these microservices without manually searching for deployment attributes such as IP, port, node, and so on. We need a dynamic, event-driven system that discovers these services and registers their attributes for other services to use, and that’s exactly what Interlock does.

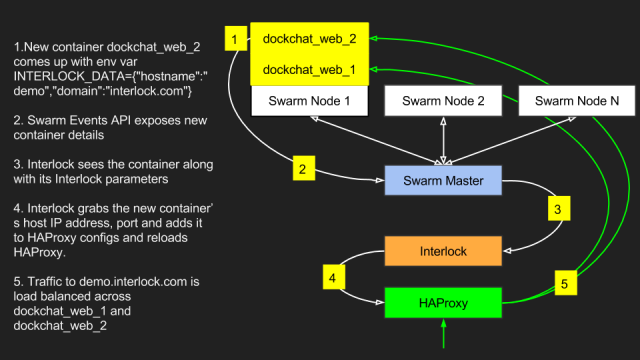

Interlock is a service that detects when microservice containers come up or go down and registers or deregisters them with other services that need to know about them. A typical example is a load-balancing service such as HAProxy or NGINX. Interlock supports both NGINX and HAProxy, and anyone can write a new plugin for their preferred service.
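The idea behind this kind of event-driven discovery can be sketched in a few lines of Python. This is not Interlock’s code: the event dictionaries use a simplified, hypothetical shape (a Docker-style `status` such as `start` or `die`, plus the container name, its site FQDN and its `ip:port` upstream), and `UpstreamRegistry` is an illustrative name. What it shows is that the load balancer’s view is rebuilt from container events instead of from a hand-maintained list.

```python
from __future__ import annotations

from collections import defaultdict


class UpstreamRegistry:
    """Hypothetical registry: site FQDN -> {container name: "ip:port"}."""

    def __init__(self) -> None:
        self.sites: dict[str, dict[str, str]] = defaultdict(dict)

    def handle(self, event: dict) -> bool:
        """Apply one simplified container event; return True if routing changed.

        The event shape is an assumption for illustration, not Docker's or
        Interlock's actual event format.
        """
        fqdn, name = event["fqdn"], event["container"]
        if event["status"] == "start":
            # Container is running: register its upstream under its site.
            self.sites[fqdn][name] = event["upstream"]
            return True
        if event["status"] in ("stop", "die", "destroy"):
            # Container went away: deregister it (.get avoids creating a site).
            removed = self.sites.get(fqdn, {}).pop(name, None)
            if fqdn in self.sites and not self.sites[fqdn]:
                del self.sites[fqdn]  # drop sites with no containers left
            return removed is not None
        return False  # e.g. "create": nothing to route to yet


registry = UpstreamRegistry()
registry.handle({"status": "start", "fqdn": "demo.interlock.com",
                 "container": "dockchatinterlock_web_1", "upstream": "10.0.0.80:32839"})
print(dict(registry.sites))
```

Whenever `handle` returns `True`, a plugin would regenerate and reload the proxy configuration, which mirrors the “update request received” and “reload triggered” lines in the Interlock logs later in this article.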

Let’s pick up where we left off and see how Interlock can solve this problem. In this example, I’m using the HAProxy plugin (you can easily substitute NGINX instead). I added a new service called ‘interlock’ to the docker-compose.yml file with the following parameters:

```yaml
# Interlock/HAProxy
interlock:
  image: ehazlett/interlock:latest
  ports:
    - "80:80"
  volumes:
    - /var/lib/docker:/etc/docker
  command: "--swarm-url tcp://<SWARM_MASTER_IP>:3375 --debug --plugin haproxy start"

# DB
db:
  image: mongo
  expose:
    - 27017
  command: --smallfiles

# Web
web:
  build: .
  environment:
    - INTERLOCK_DATA={"hostname":"demo","domain":"interlock.com"}
  ports:
    - "5000"
  links:
    - db:db
```

The main thing to note here is that Interlock is launched like any other service, in a container. I also needed to add INTERLOCK_DATA as an environment variable in the web service container. INTERLOCK_DATA tells Interlock how to register this new service.
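INTERLOCK_DATA is a small JSON document; judging by the logs later in this article, its `hostname` and `domain` keys combine into the site name demo.interlock.com. A minimal sketch of that mapping, assuming the container’s environment is available as a list of `KEY=value` strings (as it is in the compose file). `site_from_env` is a hypothetical helper, not Interlock’s parser.

```python
from __future__ import annotations

import json


def site_from_env(env: list[str]) -> str | None:
    """Hypothetical helper: return the FQDN a container asks to be published under.

    Assumes env entries look like "KEY=value" and that INTERLOCK_DATA holds
    JSON with "hostname" and "domain" keys, as in the docker-compose.yml above.
    """
    for entry in env:
        key, sep, value = entry.partition("=")  # split on the first "=" only
        if key != "INTERLOCK_DATA" or not sep:
            continue
        data = json.loads(value)
        hostname, domain = data.get("hostname"), data.get("domain")
        if hostname and domain:
            return f"{hostname}.{domain}"
    return None  # not an Interlock-managed container


env = ['INTERLOCK_DATA={"hostname":"demo","domain":"interlock.com"}', "PATH=/usr/bin"]
print(site_from_env(env))  # demo.interlock.com
```

A container with a different `hostname` value would map to a different site, which is what lets one Interlock instance route several sites.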

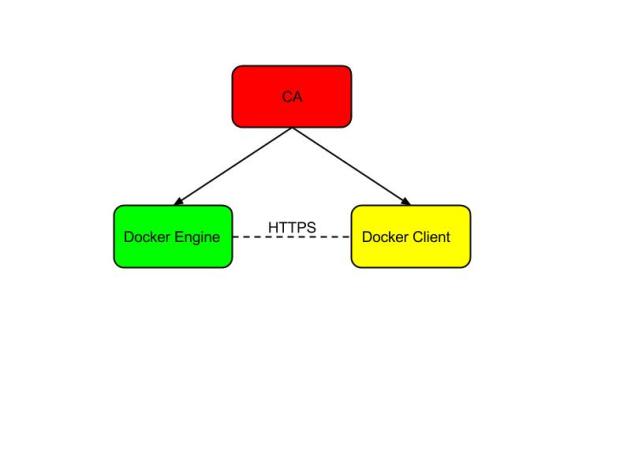

One of Docker’s great features is the Events API. From the Docker client, ‘docker events’ shows all events happening on a given Docker Engine or Docker Swarm cluster. Many projects today use the Events API for visibility into, and monitoring of, container operations. Similarly, Interlock uses the Events API to discover when containers come up or go down. It then gathers some attributes of those containers, such as service name, hostname, host IP and port, and uses this information to generate a new HAProxy/NGINX configuration. The following diagram and log outputs show how Interlock works:

dockchat-interlock# docker-compose up -d

Creating dockchatinterlock_db_1...

Creating dockchatinterlock_web_1...

Creating dockchatinterlock_interlock_1...

dockchat-interlock# docker-compose scale web=2

Creating dockchatinterlock_web_2...

Starting dockchatinterlock_web_2...

#docker logs dockchatinterlock_interlock_1

time="2015-09-19T20:44:32Z" level="debug" msg="[interlock] event: date=1442695472 type=create image=dockchatinterlock_web node:ip-10-0-0-80 container=dab9acc32f274710e0a2dc17d2546f1735993cd1b9b1e5354bd7e1a9a27ce83a"

time="2015-09-19T20:44:32Z" level="info" msg="[interlock] dispatching event to plugin: name=haproxy version=0.1"

time="2015-09-19T20:44:32Z" level="debug" msg="[interlock] event: date=1442695472 type=start image=dockchatinterlock_web node:ip-10-0-0-80 container=dab9acc32f274710e0a2dc17d2546f1735993cd1b9b1e5354bd7e1a9a27ce83a"

time="2015-09-19T20:44:32Z" level="info" msg="[interlock] dispatching event to plugin: name=haproxy version=0.1"

time="2015-09-19T20:44:32Z" level="debug" msg="[haproxy] update request received"

time="2015-09-19T20:44:32Z" level="debug" msg="[haproxy] generating proxy config"

time="2015-09-19T20:44:32Z" level="info" msg="[haproxy] demo.interlock.com: upstream=10.0.0.80:32840 container=dockchatinterlock_web_2"

time="2015-09-19T20:44:32Z" level="info" msg="[haproxy] demo.interlock.com: upstream=10.0.0.80:32839 container=dockchatinterlock_web_1"

time="2015-09-19T20:44:32Z" level="debug" msg="[haproxy] adding host name=demo_interlock_com domain=demo.interlock.com"

time="2015-09-19T20:44:32Z" level="debug" msg="[haproxy] jobs: 0"

time="2015-09-19T20:44:32Z" level="debug" msg="[haproxy] reload triggered"

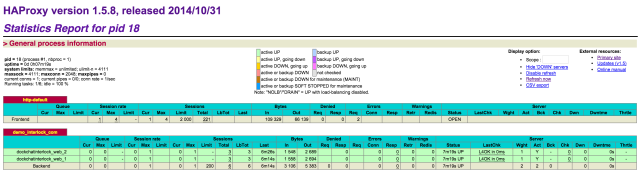

time="2015-09-19T20:44:32Z" level="info" msg="[haproxy] proxy reloaded and ready"We can see that when we scaled the web app, Interlock detected the new services and registered them in HAProxy. To verify which services belong to demo.interlock.com, we can look at HAProxy stats page (demo.interlock.com/haproxy?stats). Another point to note here is that a single Interlock service can register multiple sites automatically. The moment a new container comes up with a different INTERLOCK_DATA hostname data, Interlock will add the new config in HAProxy. Pretty neat!
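Generating the proxy configuration from the discovered containers might look roughly like this. It is a sketch, not Interlock’s HAProxy template: the frontend name, ACL names and layout are assumptions, although `acl`, `hdr(host)`, `use_backend`, `balance roundrobin` and `server ... check` are standard HAProxy keywords. Backend names follow the `demo_interlock_com` form seen in the logs above, and each additional site simply adds one more ACL and backend.

```python
from __future__ import annotations


def backend_name(fqdn: str) -> str:
    # demo.interlock.com -> demo_interlock_com (the form used in Interlock's logs)
    return fqdn.replace(".", "_")


def render_haproxy(sites: dict[str, dict[str, str]], port: int = 80) -> str:
    """Sketch only, not Interlock's template: one host ACL + backend per site.

    `sites` maps FQDN -> {container name: "ip:port"}. A usable haproxy.cfg
    would also need `global` and `defaults` sections (timeouts, logging).
    """
    lines = ["frontend http-in", f"    bind *:{port}", "    mode http"]
    # Route each request to a backend based on its Host header.
    for fqdn in sorted(sites):
        name = backend_name(fqdn)
        lines.append(f"    acl host_{name} hdr(host) -i {fqdn}")
        lines.append(f"    use_backend {name} if host_{name}")
    # One backend per site, with one health-checked server per container.
    for fqdn in sorted(sites):
        name = backend_name(fqdn)
        lines += ["", f"backend {name}", "    mode http", "    balance roundrobin"]
        for container, upstream in sorted(sites[fqdn].items()):
            lines.append(f"    server {container} {upstream} check")
    return "\n".join(lines) + "\n"


print(render_haproxy({
    "demo.interlock.com": {
        "dockchatinterlock_web_1": "10.0.0.80:32839",
        "dockchatinterlock_web_2": "10.0.0.80:32840",
    },
}))
```

Regenerating the whole file from current state on every event, rather than patching it incrementally, keeps the config consistent with whatever containers are actually running.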

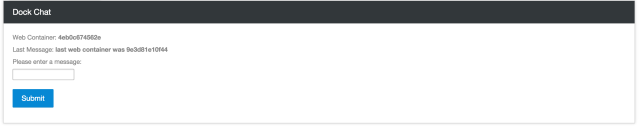

Finally, we can see from the stats page and from the web service itself that traffic hitting demo.interlock.com was load-balanced across these new containers as can be seen here:
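Assuming the generated backends use round robin (HAProxy’s default `balance` algorithm), requests are handed to the web containers in strict rotation. A toy model of that rotation; real HAProxy also takes server weights and health-check results into account:

```python
from __future__ import annotations

from collections import Counter
from itertools import cycle, islice


def round_robin(upstreams: list[str], requests: int) -> Counter:
    """Toy model: send `requests` requests to `upstreams` in strict rotation."""
    return Counter(islice(cycle(upstreams), requests))


# Upstreams taken from the Interlock log output above.
counts = round_robin(["10.0.0.80:32839", "10.0.0.80:32840"], 10)
print(counts)  # each upstream receives 5 of the 10 requests
```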

Note: to access the site from your browser, add an /etc/hosts entry that maps demo.interlock.com to the IP address of the host running the Interlock container.

Conclusion: In this article, I went over the challenge of stochasticity when deploying microservice containers on a cluster of nodes. I described how Interlock addresses this challenge by dynamically discovering these services and registering them with other services such as HAProxy. Finally, I demonstrated how combining Interlock with HAProxy makes it easier to scale your applications on a Swarm cluster.

Source repo can be found here.

Special thanks to Evan Hazlett for creating Interlock.